Floating Point Compression - How Small Can We Get?

Editors prefix: I originally penned this in September, but got cold feet that I had somehow missed something crucial in my investigations, impostor syndrome reared its head, and I binned the post. But after being involved in a tweet thread about the compressibility of these tables, I’m gonna just publish my potentially flawed work incase someone notices something obvious!

Having implemented my fair share of drivers, and worked on a few languages too, one thing that has always interested me is how can we check that math functions are correct. OpenCL C has an entire reference implementation of the math functions, but if I recall on platforms without 80-bit floats, testing that double precision results matched meant adding 0.5 ULP to ensure your result matched. Not ideal!

But taking all that aside, if you have reference floating-point functions that you want to compare against that do use the floating point units on your machine, there are some fun things that can cause discrepencies. Like if you happen to have any library that uses fast-math, it can set the floating point control registers.

The way I’ve seen math function checking done correctly is by comparing the results against a soft-float library that has predictable results on all platforms. But how can we be sure that two platforms with the same reference code produce the same results? Ideally we’d have to test two different platforms and compare each result matches. To do this without having to have two machines running concurrently, I wondered if it was possible to use MPFR to generate every possible result of a math function where we can test the entire range before the heat death of the universe? A 32-bit single input function like cos would be possible to test, but every input to a 64-bit cos would not, or a two input 32-bit function like pow would also not be fully testable.

The Raw Data⌗

So each result value from cos for a 32-bit input is 32-bits and there are 4,294,967,296 resulting values - meaning the raw uncompressed data would be 16GB. Not ideal for sending between machines! This data is the output from every representable floating-point number, starting at 0x00000000 (positive zero), all the way to 0xffffffff (a negative NaN).

ZSTD To the Rescue?⌗

My first thought was to use ZSTD on a high compression level to compress the results, which resulted in a 2.9GB result file, which is 5.5x smaller. So obviously better, but still a lot of data to ship around.

Ok, so compression alone is not enough to fix this, can we use some knowledge of the resulting data to help the compression achieve a better result?

Some Data Gathering⌗

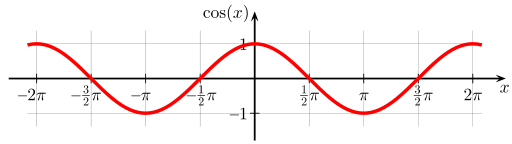

So lets look at the graph of cos:

We can see that in the range [-1..1] each next value should be close to the previous one. And if you didn’t know, over half the entire range of floating-point numbers lie in that range. So for half the input data the resulting values should be close, and my guess was these would be 1 or 2 bits out on average. So the next thing I did was gather some stats on the entire range of cos:

- The sign of the output flips between positive and negative in 20.36% of the consecutive outputs. Given that the high exponent inputs will result in wildly irregular outputs, my guess is these are primarily in these high exponent cases.

- The average exponent difference between outputs is 28.01. Again, I would expect this to be the high outputs that causes this.

- If we delta encode the mantissa (by subtracting the previous mantissa from the current one), we need 2.25 bytes on average to encode it (versus 3 bytes required in the floating-point standard).

- And if we xor the mantissa with the previous one, we need 2.30 bytes on average.

Xoring⌗

So my first attempt was to just store the previous output from cos, and xor the current one with the previous:

let next = current ^ previous;

The result? 2.6GB when I xor then ZSTD the result, a 0.3GB saving against raw ZSTDing. Better but not good enough.

Delta Encoding⌗

Next attempt was to just delta encode the entire number. Delta encoding just means we subtract the previous from the current, and store the ‘delta’ between them instead of the original.

let next = current - previous;

This took 3.8GB - ouch! My guess is that with the sign flipping 20% of the time, in those cases the delta will be huge between the two numbers and we’ll lose any benefit from the runs being close.

Varint Encoding the Mantissa⌗

At this point - I was running out of ideas. But I remembered that varint encoding could be used to reduce the storage space for integers if the number is small enough. And given that for most of our range we should have relatively small differences in the mantissa between each encoded result and that we could encode 3 bytes down to on average 2.2 bytes based on the stats I collected earlier, would that help? I used the integer-encoding crate which automatically does zig-zag encoding for signed integers, and used that on the difference of the mantissas only (xor’ing everything else).

3.6GB of space taken. Damn!

Conclusion⌗

I’m no compression expert, so there is probably some way to compress this better that I don’t know about. But even so - my original idea of just shipping these across platforms was a bad one it turns out. It’d have to get to below 500MB or thereabouts to make it feasible to ship this to multiple platforms (and you’d have to do it for every math function you wanted to test too!).

Editors suffix: The next thing I would try is based on this tweet, and use fpzip to try compress the numbers, thanks to Robin Green’s suggestion!